Introducing SafeLiShare's LLM Secure Data Proxy:

As enterprises increasingly rely on AI systems such as Large Language Models (LLMs), safeguarding sensitive data has become critical. SafeLiShare has been at the forefront of this challenge. It offers secure, scalable solutions that protect data assets in memory and during computation inside hardware-based secure enclaves (Trusted Execution Environments, or TEEs). TEEs provide a robust, hardware-level security mechanism that keeps data confidential while it is being processed. Despite this protection, however, TEE adoption remains limited across industries because of their complexity and perceived high cost.

This adoption barrier led SafeLiShare to recognize the need for more flexible solutions that serve a broader spectrum of customers without sacrificing data security. One such offering is the LLM Secure Data Proxy. It combines software-defined secure enclaves with cleanroom technologies to enable confidential computing, secure the flow of data, and prevent unauthorized data access.

Enterprise Applications: The Current State

In most enterprises, application environments consist of multiple user types and authentication layers. Here’s a simplified look at typical enterprise app components:

Real Users:

1. App Users – Interact with the UI, typically authenticated through identity providers like Okta or Azure AD.

2. ETL Users – Power users managing data ingestion and ETL (Extract, Transform, Load) workflows to prepare data for use.

Synthetic Users:

1. Service Users – For server-to-server communication, often running background tasks.

2. API Tokens – Used for external service integrations.

3. DB Users – Synthetic users with varying database privileges, giving ETL and application systems the appropriate level of data access.

Authentication & Authorization Challenges:

Enterprises use diverse authentication systems, but authorization (AuthZ) remains highly custom. Policies are scattered: some are embedded in application code, and others are handled by external systems. This fragmentation makes it hard to standardize data access controls across environments.
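One way to picture a standardized alternative is a single policy store that checks real and synthetic users the same way. The minimal Python sketch below uses deny-by-default semantics: access is granted only when a rule matches the resource, the action, and one of the principal's groups. `PolicyStore`, `Rule`, the `db/...` resource naming, and the group names are hypothetical illustrations. They are not SafeLiShare's API or the policy model of any identity provider.

```python
from dataclasses import dataclass, field
from enum import Enum


class PrincipalKind(Enum):
    # Real and synthetic user types (hypothetical labels for the categories above).
    APP_USER = "app_user"
    ETL_USER = "etl_user"
    SERVICE_USER = "service_user"
    API_TOKEN = "api_token"
    DB_USER = "db_user"


@dataclass(frozen=True)
class Principal:
    name: str
    kind: PrincipalKind
    groups: frozenset[str] = frozenset()


@dataclass(frozen=True)
class Rule:
    resource_prefix: str   # hypothetical resource naming, e.g. "db/sales/"
    action: str            # e.g. "read"
    allowed_groups: frozenset[str]


@dataclass
class PolicyStore:
    rules: list[Rule] = field(default_factory=list)

    def is_allowed(self, principal: Principal, action: str, resource: str) -> bool:
        # Deny by default: allow only if some rule matches the resource,
        # the action, and at least one of the principal's groups.
        return any(
            resource.startswith(rule.resource_prefix)
            and rule.action == action
            and bool(principal.groups & rule.allowed_groups)
            for rule in self.rules
        )


store = PolicyStore([
    Rule("db/hr/", "read", frozenset({"hr-analysts"})),
    Rule("db/sales/", "read", frozenset({"sales", "etl"})),
])
# The same check applies to a synthetic service user and to a human app user.
etl = Principal("nightly-ingest", PrincipalKind.SERVICE_USER, frozenset({"etl"}))
analyst = Principal("alice", PrincipalKind.APP_USER, frozenset({"hr-analysts"}))

print(store.is_allowed(etl, "read", "db/sales/orders"))     # True
print(store.is_allowed(etl, "read", "db/hr/salaries"))      # False
print(store.is_allowed(analyst, "read", "db/hr/salaries"))  # True
```

Keeping every rule in one place means an ETL service account and an app user are judged by the same logic, rather than by checks embedded separately in each application.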

Gaps in Authorization for RAG Applications

LLM-driven workflows, especially Retrieval-Augmented Generation (RAG) applications, expose several security gaps:

- ETL users may have overly broad access to sensitive datasets.

- The chunking and embedding steps of a RAG pipeline may inadvertently expose sensitive data.

- Vector databases, which store data chunks and embeddings, often lack centralized management, potentially leading to data leaks.

- Prompts sent to external LLMs may accidentally expose sensitive data during inference.

These gaps reveal the critical need for enhanced visibility, data protection, and enforcement mechanisms in AI environments.
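To make the vector-database gap concrete, the minimal Python sketch below shows permission-aware retrieval. Each chunk keeps the access labels of its source document. Before ranking, the retriever drops any chunk the requesting user cannot see, so that chunk never reaches the prompt. The `Chunk` type, the `allowed_groups` labels, the toy two-dimensional embeddings, and the sample texts are illustrative assumptions. They do not describe SafeLiShare's product or any particular vector database.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    embedding: tuple[float, ...]
    # Access labels copied from the source document at ingestion time (assumption).
    allowed_groups: frozenset[str]


def cosine(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    # Cosine similarity between two vectors; returns 0.0 for zero vectors.
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: tuple[float, ...], chunks: list[Chunk],
             user_groups: frozenset[str], k: int = 3) -> list[Chunk]:
    # Enforce authorization first, then rank only the chunks the user may see.
    visible = [c for c in chunks if c.allowed_groups & user_groups]
    visible.sort(key=lambda c: cosine(query, c.embedding), reverse=True)
    return visible[:k]


chunks = [
    Chunk("finance report excerpt", (0.9, 0.1), frozenset({"finance"})),
    Chunk("salary band table", (0.8, 0.2), frozenset({"hr"})),
    Chunk("public product FAQ", (0.1, 0.9), frozenset({"everyone"})),
]
results = retrieve((1.0, 0.0), chunks, frozenset({"finance", "everyone"}), k=2)
print([c.text for c in results])  # ['finance report excerpt', 'public product FAQ']
```

The filter runs before ranking, not after. As a result, an unauthorized chunk can never take a top-k slot, even when it is the closest match. A production system would more likely push this filter into the vector store's own metadata filtering.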

Solving the Security Gaps:

Introducing LLM Secure Data Proxy

The LLM Secure Data Proxy offers a multifaceted approach to addressing the gaps in current enterprise security, particularly for RAG applications. Here’s how:

- Visibility into Data Systems

SafeLiShare’s solution gives enterprises deep observability into who (real users and synthetic components alike) is accessing sensitive data. This visibility extends to each layer of the application. It identifies which services, users, or components interact with critical data stores, such as vector databases, during the LLM workflow.

- Identifying Data Access Violations

The LLM Secure Data Proxy integrates with enterprise policy stores and checks data access against the policies defined there. By identifying violations early, organizations can stop unauthorized access before sensitive information is exposed.

- Data Leak Prevention

Data leakage, particularly of Personally Identifiable Information (PII), is a significant risk in LLM processing environments. SafeLiShare’s secure data proxy inspects and controls sensitive data to prevent inadvertent exfiltration during interactions with external LLMs.

- Data Access Policy Enforcement

Once violations are identified, SafeLiShare’s solution enforces strict access controls so that only authorized users and services can reach sensitive data. Enforcement applies across the entire LLM chain, from data ingestion to prompt generation.

- Secure Communication Flow

By creating a Software-Defined Secure Enclave, the LLM Secure Data Proxy protects data as it moves through processing. It is not as strong as a hardware-based TEE. Even so, it addresses most data security concerns and offers a flexible, cost-effective alternative for enterprises hesitant to adopt full TEE solutions.

- Trusted Execution Environments (TEE)

For customers that require the highest level of security, SafeLiShare continues to offer hardware-based secure enclaves (TEEs), which protect data even during compute operations. TEEs provide the strongest guarantee of runtime data confidentiality, which is often essential in highly regulated industries.
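Several of the proxy capabilities described above (visibility, violation detection, leak prevention, and enforcement) can be pictured as a single checkpoint placed in front of an external LLM. The following Python sketch is a hypothetical illustration, not SafeLiShare's implementation. `SecureDataProxy`, the policy dictionary format, the two regex patterns, and the `fake_llm` stand-in are all assumptions. Simple regex matching also stands in for real PII detection, which needs much broader coverage. The sketch does not model software-defined or hardware enclaves.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Illustrative PII patterns only (assumption); real DLP needs far broader detection.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, list[str]]:
    # Replace each PII match with a placeholder and report which types were found.
    found = []
    for label, pattern in PII_PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


@dataclass
class SecureDataProxy:
    # Hypothetical policy format: principal name -> LLM endpoints it may call.
    policy: dict[str, set[str]]
    forward: Callable[[str, str], str]  # (endpoint, prompt) -> completion
    audit_log: list[dict] = field(default_factory=list)

    def complete(self, principal: str, endpoint: str, prompt: str) -> str:
        allowed = endpoint in self.policy.get(principal, set())
        safe_prompt, pii_types = redact(prompt)
        # Visibility: record every attempt, whether or not it is allowed.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "endpoint": endpoint,
            "allowed": allowed,
            "pii_redacted": pii_types,
        })
        # Enforcement: block principals the policy does not cover.
        if not allowed:
            raise PermissionError(f"{principal} may not call {endpoint}")
        # Leak prevention: only the redacted prompt leaves the proxy.
        return self.forward(endpoint, safe_prompt)


def fake_llm(endpoint: str, prompt: str) -> str:
    # Stand-in for an external LLM call; echoes the prompt it received.
    return f"{endpoint} saw: {prompt}"


proxy = SecureDataProxy(policy={"support-bot": {"external-llm"}}, forward=fake_llm)
reply = proxy.complete("support-bot", "external-llm",
                       "Summarize the ticket from jane@example.com, SSN 123-45-6789.")
print(reply)  # external-llm saw: Summarize the ticket from [EMAIL], SSN [SSN].

try:
    proxy.complete("etl-job", "external-llm", "hello")
except PermissionError as err:
    print(err)  # etl-job may not call external-llm
```

Because every request passes through one place, the audit log, the policy check, and the redaction step all operate on the same traffic.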

The Path Forward

While hardware-based secure enclaves remain an essential part of the SafeLiShare offering, the LLM Secure Data Proxy presents an adaptive solution for enterprises at varying stages of confidential computing adoption. By providing observability, policy enforcement, and both software and hardware-based data protection options, SafeLiShare equips enterprises to protect sensitive data and mitigate security risks throughout the LLM workflow. Whether through cleanroom technology or flexible secure enclaves, SafeLiShare offers a comprehensive path to secure the future of AI-driven applications.

SafeLiShare’s LLM Secure Data Proxy isn’t just a response to current security gaps; it’s a forward-looking solution, ready to empower organizations as they adopt AI at scale.

Experience Secure Collaborative Data Sharing Today.

Learn more about how SafeLiShare works

Suggested for you

February 21, 2024

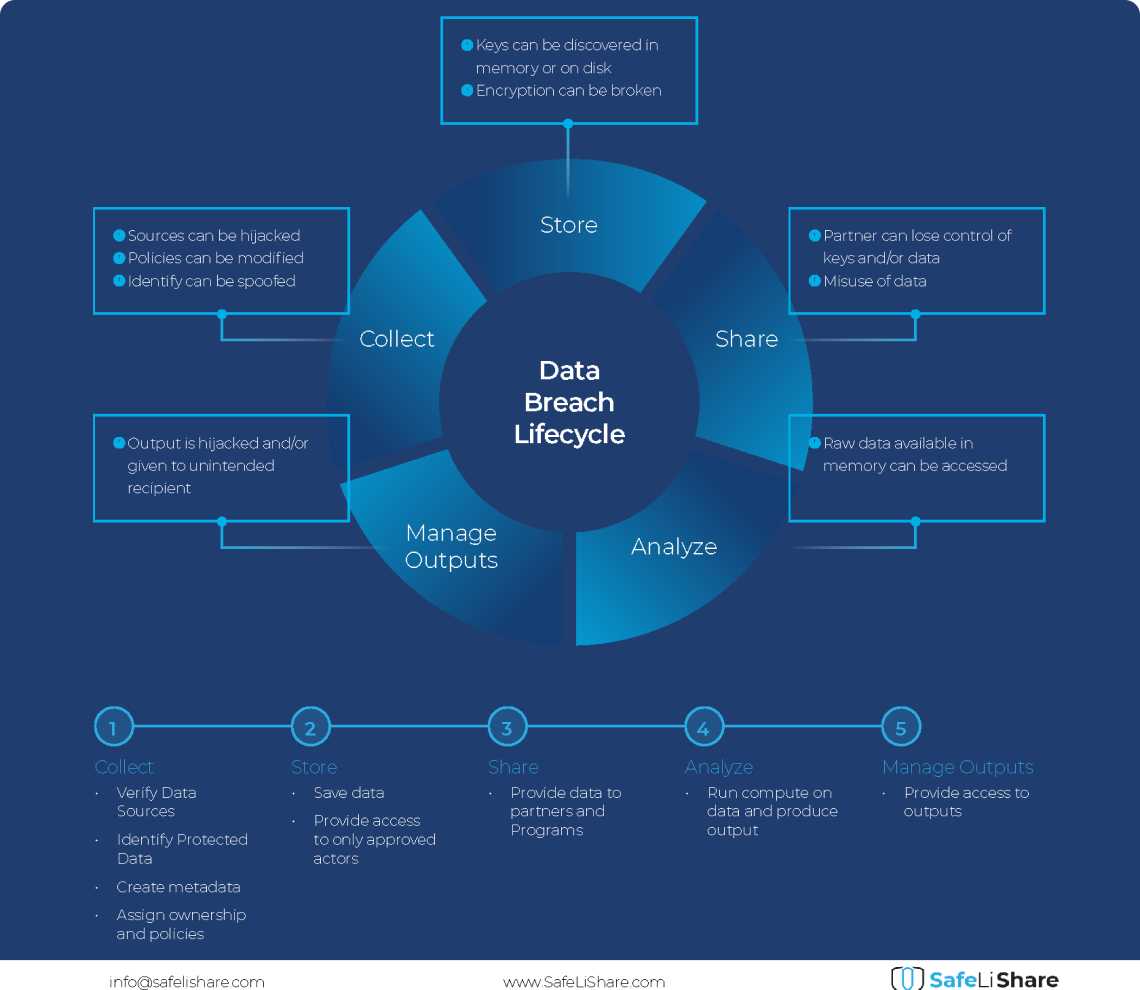

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.