OWASP Top 10 for LLMs

Large Language Models (LLMs) open up exciting possibilities, but they also expose systems to the vulnerabilities catalogued in the OWASP Top 10 for LLM Applications. The LLM Secure Data Proxy (SDP), combined with Secure Enclave technology, is designed to mitigate these risks through safeguarding, governance, and enforcement measures.

Addressing Prompt Injections

One of the most critical issues in LLMs is prompt injection, in which crafted inputs manipulate the model into ignoring its instructions or producing unintended responses. Secure Enclave technology allows every prompt to be inspected before it reaches the model, and every completion to be inspected before it is returned. The LLM SDP can enforce strict enterprise policies that validate inputs and detect suspicious patterns, helping to prevent unauthorized actions and protect data from compromise.
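To make "validate inputs and detect suspicious patterns" concrete, here is a minimal, hypothetical sketch of the kind of policy check a proxy could run before forwarding a prompt. It is not the LLM SDP's API: the pattern list, the `MAX_PROMPT_CHARS` limit, and the `screen_prompt` function are illustrative assumptions. Regex deny-lists are easy to evade, so a real policy would layer several checks.

```python
import re
from dataclasses import dataclass

# Hypothetical deny-list of phrasings often seen in injection attempts.
# Illustrative only: production policies should not rely on regexes alone.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all |any )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
    re.compile(r"you are now in developer mode", re.IGNORECASE),
]

MAX_PROMPT_CHARS = 4000  # hypothetical policy limit


@dataclass
class Verdict:
    allowed: bool
    reason: str


def screen_prompt(prompt: str) -> Verdict:
    """Apply simple policy checks before a prompt is forwarded to the model."""
    if len(prompt) > MAX_PROMPT_CHARS:
        return Verdict(False, "prompt exceeds length limit")
    for pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(prompt):
            return Verdict(False, f"matched suspicious pattern: {pattern.pattern}")
    return Verdict(True, "ok")
```

A blocked verdict would stop the request at the proxy, and its `reason` could feed an audit trail.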

Preventing Sensitive Data Disclosure

LLM outputs can inadvertently reveal sensitive information, leading to data leaks. Secure Enclaves govern output handling by enforcing security protocols that classify and control data before it leaves the enclave. This governance keeps confidential information protected and helps organizations meet legal and compliance requirements.
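Classifying and controlling output before it leaves the enclave can be pictured as a redaction pass over each completion. The sketch below is hypothetical: the two rules (email addresses and US SSN-style numbers) and the `redact_completion` name are assumptions chosen for illustration, not SafeLiShare's actual classifiers.

```python
import re

# Hypothetical classification rules: label -> pattern to redact.
REDACTION_RULES = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact_completion(text: str) -> tuple[str, list[str]]:
    """Replace matches with [LABEL] placeholders and report which labels fired."""
    found = []
    for label, pattern in REDACTION_RULES.items():
        # subn returns the new string and the number of substitutions made.
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found
```

The returned labels let a policy decide whether to release the redacted text, block it, or log it for review.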

Combating Training Data Poisoning

When attackers compromise an LLM's training data, they can influence the model's behavior. By isolating sensitive training data within Secure Enclaves, the LLM SDP helps protect against training data poisoning: the enclave's isolated environment limits unauthorized access and tampering, preserving the integrity of the model.
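One common way to detect tampering with data that has already been approved is to record a cryptographic hash of each file and re-check it before training. The sketch below assumes file-based datasets and uses hypothetical helper names (`build_manifest`, `find_tampered`). It catches changes made after the manifest is built, but not data that was poisoned at its source.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(files: list[Path]) -> dict[str, str]:
    """Record a trusted hash for each approved training file."""
    return {str(p): sha256_of(p) for p in files}


def find_tampered(manifest: dict[str, str]) -> list[str]:
    """Return files that are missing or whose hash no longer matches."""
    return [
        name
        for name, expected in manifest.items()
        if not Path(name).exists() or sha256_of(Path(name)) != expected
    ]
```

In an enclave setting, the manifest itself would need to be stored where an attacker cannot rewrite it.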

Securing Plugin Vulnerabilities

LLM applications often integrate with external plugins, which can introduce security risks such as remote code execution. By managing plugin execution within a Secure Enclave, the LLM SDP controls access and restricts plugins to predefined, secure operations, strengthening the safety of the LLM ecosystem.
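Limiting plugins to predefined operations amounts to an allowlist: any call not explicitly registered is refused. This is a hypothetical sketch; the `allow` decorator, the `dispatch` function, and the `currency.convert` operation are invented for illustration and do not describe the SDP's plugin interface.

```python
from typing import Any, Callable

# Hypothetical registry of plugin operations that policy has approved.
ALLOWED_OPERATIONS: dict[str, Callable[..., Any]] = {}


def allow(name: str) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Decorator that registers a function as an approved plugin operation."""
    def register(fn: Callable[..., Any]) -> Callable[..., Any]:
        ALLOWED_OPERATIONS[name] = fn
        return fn
    return register


@allow("currency.convert")
def convert(amount: float, rate: float) -> float:
    return round(amount * rate, 2)


def dispatch(operation: str, **kwargs: Any) -> Any:
    """Run a plugin call only if the operation is on the allowlist."""
    handler = ALLOWED_OPERATIONS.get(operation)
    if handler is None:
        raise PermissionError(f"operation not permitted: {operation}")
    return handler(**kwargs)
```

A request such as `dispatch("shell.exec", cmd="ls")` raises `PermissionError`, because default-deny is what keeps an unregistered capability like command execution out of reach.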

Comprehensive Governance and Monitoring

Secure Enclave technology, combined with the LLM SDP, enables centralized governance across the LLM lifecycle. This approach supports real-time auditing, role-based access management, and compliance monitoring across LLM touchpoints, from data ingestion to inference and result handling, thereby addressing vulnerabilities such as overreliance on model outputs and model theft.
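Role-based access management and real-time auditing usually go together: every authorization decision is both enforced and recorded. The minimal sketch below assumes a hypothetical role table and an in-memory log. A real deployment would write to tamper-evident storage. Denying `export_model` to most roles illustrates one way access control supports defenses against model theft.

```python
import json
import time

# Hypothetical role-to-permission mapping.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "analyst": {"inference"},
    "ml_engineer": {"inference", "fine_tune"},
    "admin": {"inference", "fine_tune", "export_model"},
}

# In-memory stand-in for an audit sink; each entry is one JSON record.
AUDIT_LOG: list[str] = []


def authorize(user: str, role: str, action: str) -> bool:
    """Check role-based permission and append an audit record either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "ts": time.time(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    }))
    return allowed
```

Logging denials as well as approvals is what makes the trail useful for compliance reviews.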

By integrating Secure Enclave as a Service with LLM SDP, enterprises can confidently deploy LLMs while ensuring their models and data are protected against the top vulnerabilities outlined by OWASP. This combination creates a secure, governed, and transparent environment for handling sensitive AI workloads at scale.

For a detailed description of each vulnerability, refer to the official OWASP Top 10 for LLM Applications list on the OWASP website.

Learn more about how SafeLiShare works

Suggested for you

February 21, 2024

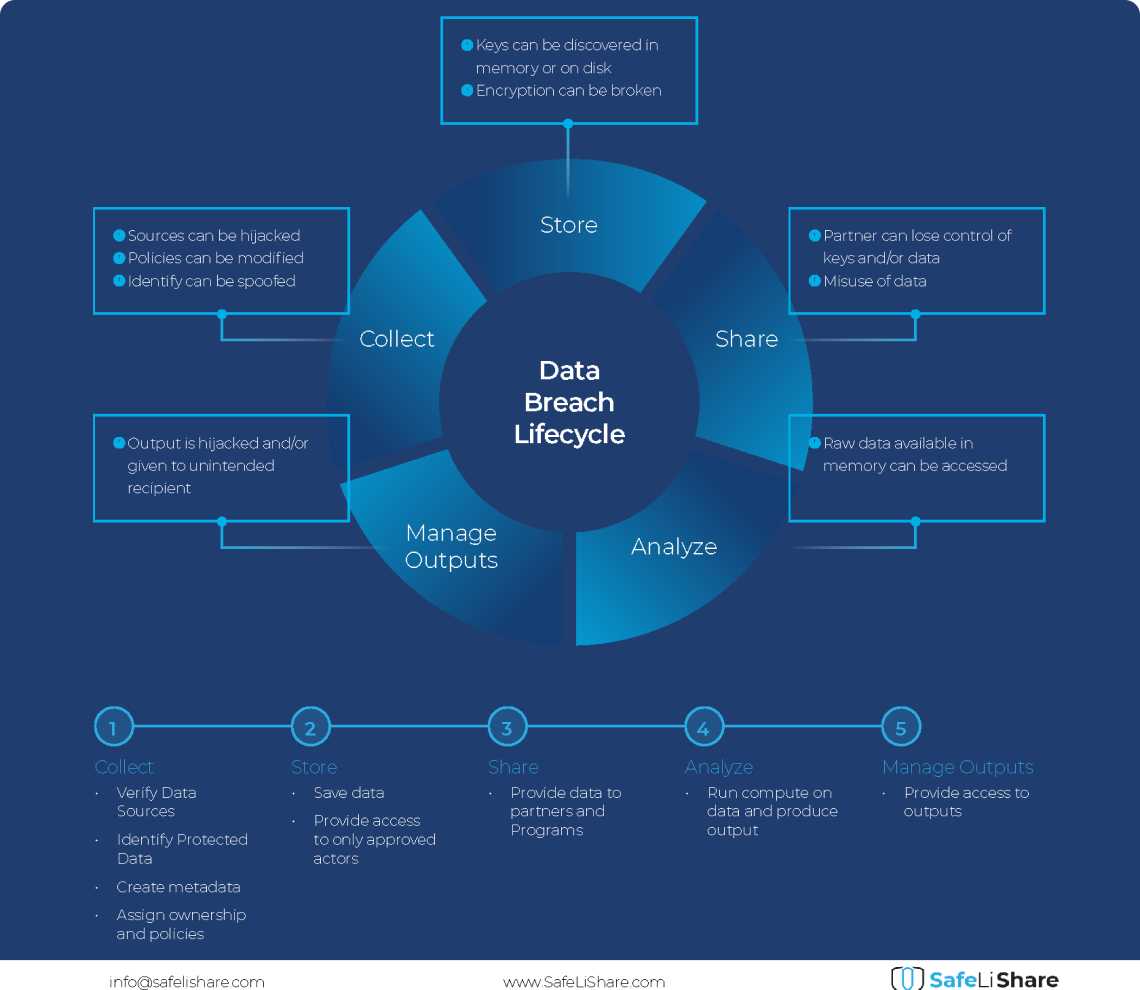

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.